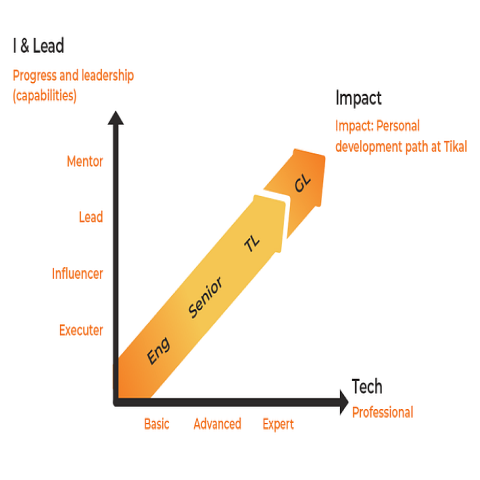

Chaim Turkel

Data & LLM Architect & Group Lead

Chaim holds the position of Group Leader, mentoring fellow team members, and works with clients as a Tech Lead to meet their technical and business requirements. With 20 years of experience across a diverse range of clients, Chaim brings a passion for solving new challenges in distributed applications and data.

Portfolio

AI Architect

Designed and implemented an end-to-end AI pipeline that ingests couples-therapy session videos, transcribes them with diarization and speaker identification, and then applies cutting-edge LLM frameworks (e.g., LangGraph) to generate actionable insights for every session. The solution runs on Google Cloud Platform and features full monitoring and alerting, using tools such as LightLLM.

DBT Data Platform Architect

Designed and implemented a self-service data platform on Pandas, Polars, and Dagster, optimizing data workflows for research and development teams. Established industry best practices for table naming and data warehouse mapping. Developed an intuitive CLI tool to streamline model creation and built a seamless environment for running and updating data models, enhancing developer experience and efficiency.

LLM Architect

In my role as Technical Lead Architect, I am developing a Proof of Concept (POC) for a Large Language Model (LLM) retrieval-augmented generation (RAG) application. The project implements semantic enrichment using a diverse set of technologies, including vector databases, Elasticsearch, graph databases, caching mechanisms, and other advanced tools. The POC is also designed to support a large-scale application, so scalability is a central aspect of the architecture.

My responsibilities include orchestrating the integration of these technologies to enhance the application's semantic capabilities while ensuring robust performance at scale.

Tech Lead Architect

Tech Lead Architect on a project to overhaul an automotive data processing platform for car sensor data. The new platform was designed with data mesh principles in mind, and its orchestration was rebuilt on Argo Workflows, replacing Airflow. The updated platform is expected to support thousands of on-demand pipeline runs over terabytes of data, while abstracting away Argo terminology and configuration so that developers can concentrate on their components.

Part of our data mesh initiative involves implementing a comprehensive CI/CD pipeline for deploying to various environments on AWS.

The development of this platform has been a collaborative effort, with teams working together across multiple continents.

BigData Architect

Tech Lead Architect for a state-of-the-art data platform. The platform supports metadata definitions from data ingestion through data transformation using dbt. It applies data mesh concepts to enable teams across the company to generate value. Technologies used include Snowflake and Argo Workflows.

BigData Architect

Design and implement a state-of-the-art self-service data platform. The platform is based on dbt, with Databricks as the underlying data warehouse and the Hive metastore for metadata. Using Python, we created a zero-configuration development environment, including Docker, on top of Codespaces. In addition to standard data mesh practices, we built an orchestration layer that generates dbt DAGs on top of Airflow, with built-in alerts per DAG.

The data platform also includes the ability to export data and metadata to external systems such as Looker and Segment.

SentinelOne

BigData Architect

Design and implement a data pipeline processing millions per minute for integrating systems, using the Spark operator on Kubernetes and Kafka. Owned the pipeline end to end, from design to full automation using Jenkins, Helm, and Pulumi.

Design and implement a BI platform on Redshift. Create data pipelines that bring data from multiple sources (Kafka, Postgres, and more) and multiple regions into a single location in Redshift, so that BI analysts can use the data to understand costs and derive other insights. Includes scheduled batch processing and streaming applications.

Behalf

BigData Architect

Design and implement a data pipeline processing millions per minute for integrating systems, using the Spark operator on Kubernetes and Kafka. Owned the pipeline end to end, from design to full automation using Jenkins, Helm, and Pulumi.

Design and implement a BI platform on Redshift. Create data pipelines that bring data from multiple sources (Kafka, Postgres, and more) and multiple regions into a single location in Redshift, so that BI analysts can use the data to understand costs and derive other insights. Includes scheduled batch processing and streaming applications.

Behalf

Microservice Scale Architect

Design and implement large-scale scraping of data from cloud providers (AWS, Azure, and others) using MicroProfile with the Quarkus implementation. Design the infrastructure for all scrapers using reactive programming. Design the infrastructure for storing the data in Elasticsearch. Enhance data enrichment using Apache Flink.

Behalf

BI Platform Architect

Design and implement a data lake on top of Google Cloud Storage. The architecture used the data lake as the data interface for all R&D projects, covering different data sources such as Salesforce, MongoDB, Postgres, and others. Use Google Dataflow for ETL to bring and process data from Salesforce and MongoDB into BigQuery.

Build a data warehouse on top of BigQuery, using Airflow as the orchestrator.

Thomson Reuters

Data Architect & Implementer

Design and implement an ETL pipeline to stream data from Salesforce to BigQuery using Apache Beam on Google Dataflow. The task included understanding the data volumes and the APIs that Salesforce supports. Delta changes needed to be transferred every 15 to 30 minutes across all tables, with configuration options to add tables and columns in the future without code changes. Apache Beam on Dataflow was chosen as the data pipeline for its abstract SDK for processing data. Google App Engine was used as the scheduling mechanism, and Google Apps Script as the monitoring dashboard.

Thomson Reuters

Java Distributed Architect

Help a startup re-architect a monolithic application into a microservice platform. This included breaking the application into multiple services with Kafka as the communication bus. Help redesign the application for scale by introducing RxJava in the software tier, clustering the servers, and adopting Cassandra as the backend database for scaling data. Help introduce a more functional programming style using the Javaslang framework. On the DevOps side, I helped create the CloudFormation structure on AWS for server clustering.

FIS

Java Distributed Architect

Build the architecture for an application that downloads and parses documents from multiple sources. Support high load using Spring Boot with Scala, on top of Akka for concurrency. The application was then packaged in Docker. Tech lead on a legacy Scala product for NLP text analysis. Add a framework for streaming data from server databases into the application's Lucene indexes.

FIS

Java Developer

WSF: a dynamic platform for web service creation by customization teams. Supplied tools for the customization team using Maven archetypes and CLI tools for WSDL generation. Application server framework based on Spring, CXF, and security.

AMF: a monitoring platform for Amdocs applications. Added support for real-time history streaming via Graphite and Elasticsearch. Used ZooKeeper for configuration and cluster synchronization.

FIS

Java Developer

Support a legacy database using the Spring and Hibernate frameworks. Because of the legacy constraints, the job involved deep dives into Hibernate, including creating smart Hibernate filters per stack level. Implemented all SOAP support via the Dozer framework to reduce code duplication. REST API versioning, and REST API documentation via Enunciate. Built a generic framework for the internal protocol using JXPath and Javassist.

Latest Articles

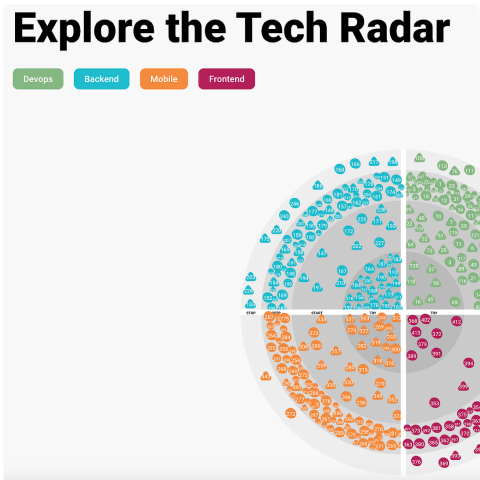

How We Predict Future Tech Trends with the Tech Radar

Starting a Roadmap Program in Your Organization